Regularization in Machine Learning: Meaning

Regularization refers to techniques used to calibrate machine learning models by minimizing an adjusted loss function. In doing so, it tries to push the coefficients toward zero.

What Is Regularization In Machine Learning Techniques Methods

It is a technique to prevent the model from overfitting.

Part 1 deals with the theory. There are different ways to go about regularization; one of them starts from measuring a loss function.

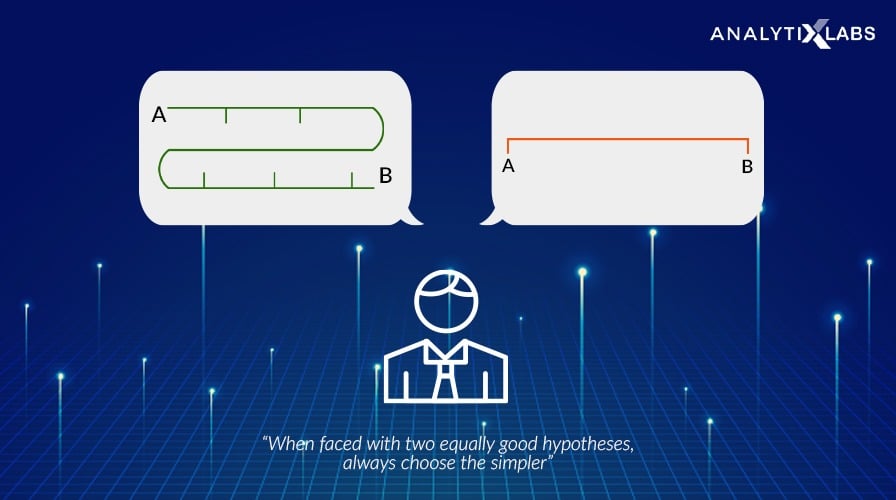

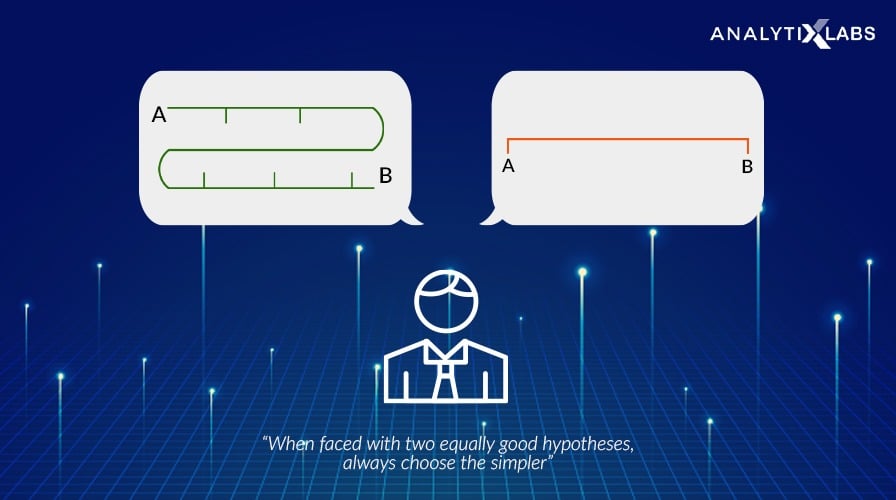

Regularization is an application of Occam's razor: among models that fit the data comparably well, the simpler one is preferred.

In machine learning, regularization is a procedure that shrinks the coefficients toward zero. It helps us fit a model that is less tied to the quirks of the training data. This is an important theme in machine learning.
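The shrinking effect can be seen in a small experiment. The following is a minimal sketch, assuming ridge (L2) regression without an intercept and synthetic data; the helper `ridge_fit`, the weights in `true_w`, and the values in `lams` are illustrative choices, not something the text above prescribes.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution: w = (X^T X + lam * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

# Synthetic data (an assumption made for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
true_w = np.array([3.0, -2.0, 0.0, 1.5, 0.0])
y = X @ true_w + rng.normal(scale=0.5, size=50)

# Fit with increasing penalty strength and record the size of the weights.
lams = [0.0, 1.0, 10.0, 100.0]
norms = [float(np.linalg.norm(ridge_fit(X, y, lam))) for lam in lams]

for lam, norm in zip(lams, norms):
    print(f"lambda={lam:>6}: ||w|| = {norm:.3f}")
```

As the penalty strength grows, the norm of the fitted coefficient vector gets smaller; with `lam = 0.0` the fit reduces to ordinary least squares.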

Regularization is one of the most important concepts in machine learning. We know that the performance of a model can be improved by applying certain methods; these are called regularization methods.

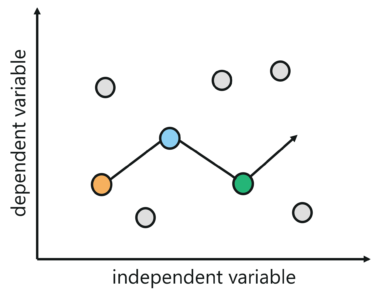

It is very important to understand regularization in order to train a good model. In general, regularization means making things regular or acceptable. The red curve shows the model before regularization.

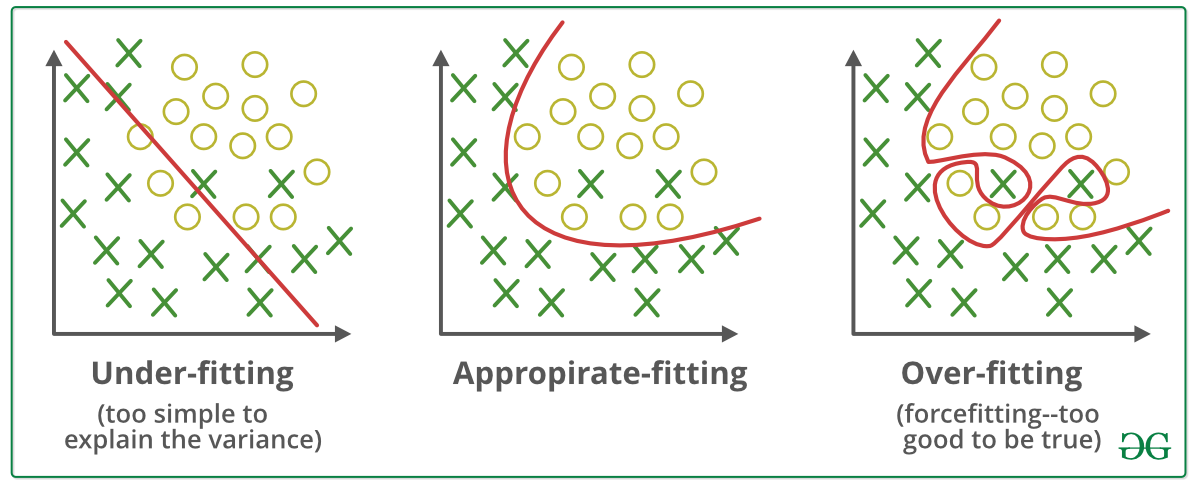

This is exactly why we use it in applied machine learning. Regularization is necessary whenever the model begins to overfit; if the model underfits instead, the regularization is too strong and should be relaxed. Regularization is essential in both machine learning and deep learning.

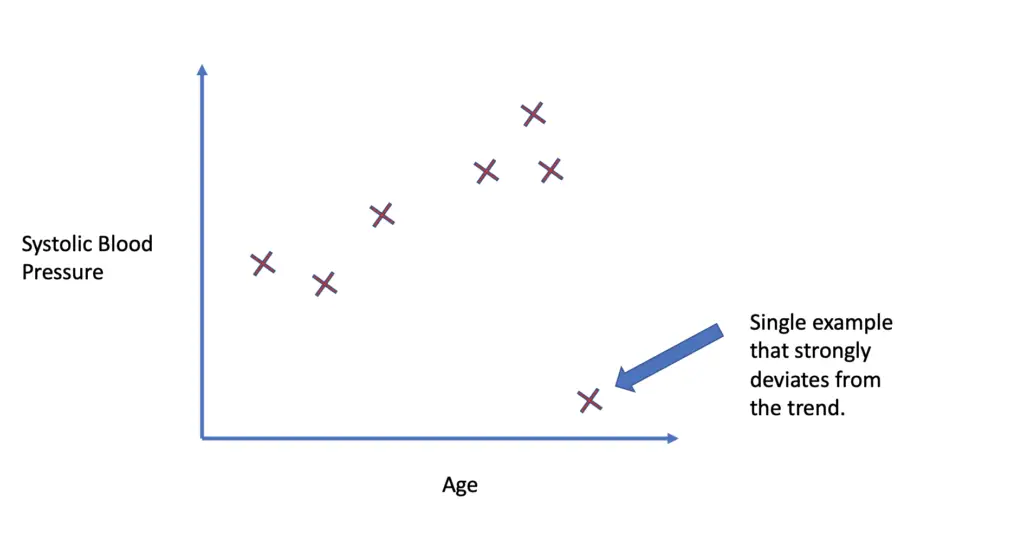

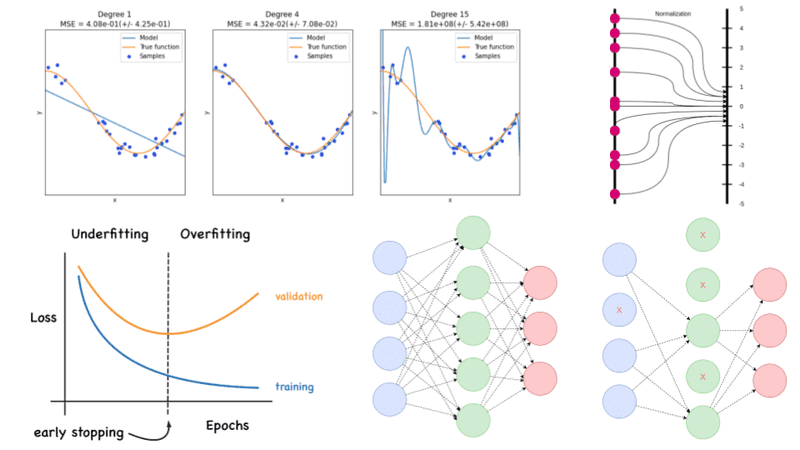

We already discussed the overfitting problem, which causes a machine learning model to make inaccurate predictions. Both overfitting and underfitting ultimately cause poor predictions on new data. It is possible to reduce overfitting in an existing model by adding a penalty term to its cost function.

This is where regularization comes into the picture: it shrinks, or regularizes, the learned estimates toward zero by adding a penalty term to the loss function being optimized. Regularization is an important concept in machine learning because it addresses the overfitting problem. The regularization term is probably what most people mean when they talk about regularization.
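A regularization term of this kind can be sketched as follows. This is only an illustration under stated assumptions: the data loss is taken to be mean squared error, the penalty is the squared L2 norm of the weights, and the names `mse`, `l2_penalty`, `regularized_loss`, and the toy data are hypothetical.

```python
import numpy as np

def mse(w, X, y):
    """Data-dependent part of the loss: mean squared error."""
    residuals = X @ w - y
    return float(np.mean(residuals ** 2))

def l2_penalty(w, lam):
    """Regularization term: depends only on the weights, not on the data."""
    return lam * float(np.sum(w ** 2))

def regularized_loss(w, X, y, lam):
    """Adjusted loss = data loss + penalty."""
    return mse(w, X, y) + l2_penalty(w, lam)

# Two identical feature columns, so many weight vectors fit equally well.
X = np.array([[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
y = np.array([2.0, 4.0, 6.0])

w_small = np.array([1.0, 1.0])    # fits the data exactly
w_large = np.array([10.0, -8.0])  # also fits the data exactly
lam = 0.1

for name, w in [("small", w_small), ("large", w_large)]:
    print(name, "mse:", mse(w, X, y), "adjusted:", regularized_loss(w, X, y, lam))
```

Both weight vectors have zero training error, so the data loss alone cannot tell them apart; the penalty makes the adjusted loss prefer the smaller weights.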

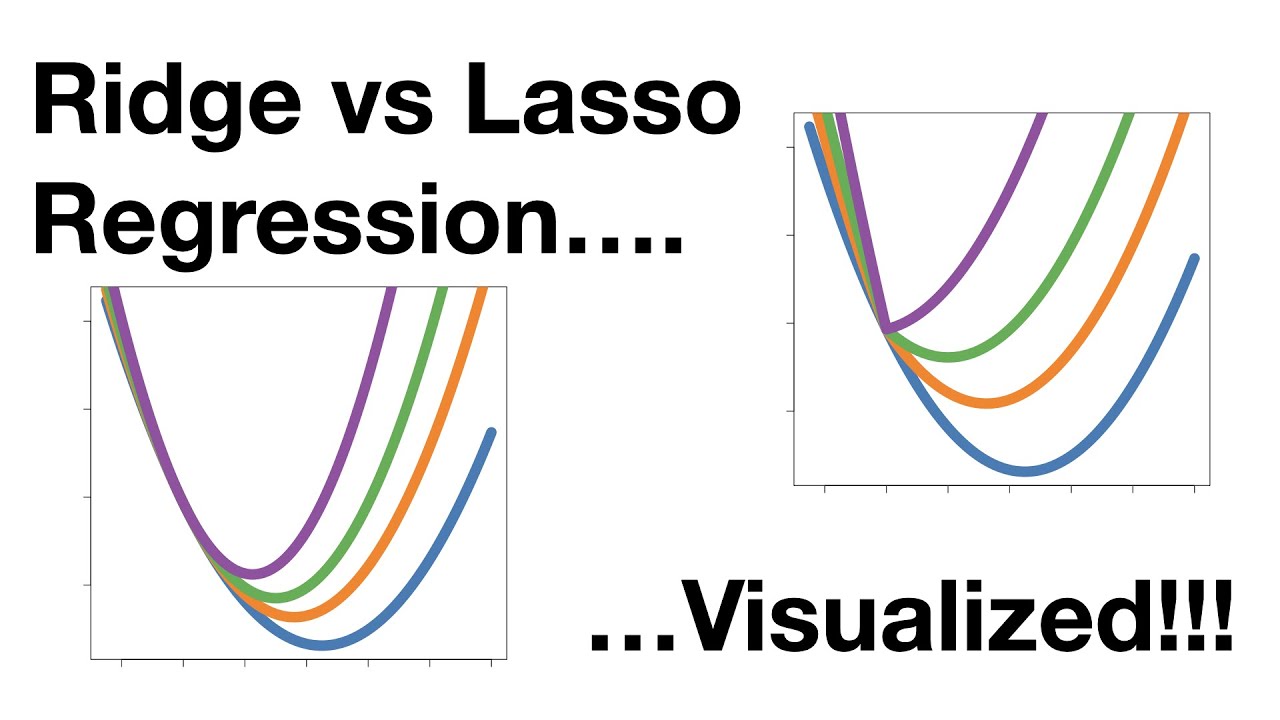

This is a form of regression that constrains, regularizes, or shrinks the coefficient estimates toward zero. The penalty acts as a cost, added to the objective function, for bringing in more features. I have covered the entire concept in two parts.

With a well-chosen penalty strength, regularization can greatly reduce a model's variance without significantly increasing its bias. Regularization techniques prevent machine learning algorithms from overfitting. Regularization is a way to balance overfitting against underfitting during training.
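The variance and bias effect can be checked empirically. The sketch below is one possible setup, not a definitive experiment: it assumes ridge regression on a fixed synthetic design matrix, refits the model on many noisy copies of the targets, and compares the spread of the estimates. The noise scale, the penalty value `5.0`, and all names are assumptions made for illustration.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution; lam = 0 gives ordinary least squares."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

rng = np.random.default_rng(42)
n_samples, n_trials = 30, 500
true_w = np.array([2.0, -1.0, 0.5])

# Fixed inputs; only the noise in the targets changes between trials.
X = rng.normal(size=(n_samples, 3))
noise = rng.normal(scale=3.0, size=(n_trials, n_samples))

def variance_and_bias_sq(lam):
    """Total variance and squared bias of the estimates across trials."""
    estimates = np.array([ridge_fit(X, X @ true_w + eps, lam) for eps in noise])
    variance = float(estimates.var(axis=0).sum())
    bias_sq = float(np.sum((estimates.mean(axis=0) - true_w) ** 2))
    return variance, bias_sq

var_ols, bias_ols = variance_and_bias_sq(0.0)
var_ridge, bias_ridge = variance_and_bias_sq(5.0)

print(f"OLS:   variance={var_ols:.3f}  bias^2={bias_ols:.3f}")
print(f"ridge: variance={var_ridge:.3f}  bias^2={bias_ridge:.3f}")
```

In this setup the regularized estimates vary less from trial to trial than the unregularized ones. How much bias is paid for that reduction depends on the penalty strength and the noise level, so the printed numbers are specific to these assumed settings.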

Left unchecked, overfitting will affect the efficiency of the model. Regularization is one of the basic and most important concepts in machine learning. It refers to the collection of techniques used to tune machine learning models by minimizing an adjusted loss function to prevent overfitting.

To understand regularization and its link with machine learning, we first need to understand why we need it. Regularization is one of the techniques used to control overfitting in high-flexibility models. It is a key concept in machine learning because it helps us choose a simple model rather than a complex one.

In other words, this technique discourages learning a more complex or more flexible model. Regularization is a method of rescuing a regression model from overfitting by shrinking the coefficients of its features toward zero.

The tuning parameter determines the impact on bias and variance. Regularization is not a complicated technique, and it simplifies the machine learning process.
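One common way to choose the tuning parameter is to compare errors on held-out data. The following is a minimal sketch under stated assumptions: ridge regression, a single train/validation split, and synthetic data. The candidate values in `lams` and the helper names are hypothetical.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge solution: w = (X^T X + lam * I)^-1 X^T y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def mse(w, X, y):
    """Mean squared error of the linear model w on (X, y)."""
    return float(np.mean((X @ w - y) ** 2))

# Synthetic data: only 3 of the 10 features actually matter.
rng = np.random.default_rng(7)
n_features = 10
true_w = np.zeros(n_features)
true_w[:3] = [2.0, -1.5, 1.0]
X = rng.normal(size=(60, n_features))
y = X @ true_w + rng.normal(scale=2.0, size=60)

# Simple hold-out split: first half for training, second half for validation.
X_train, y_train = X[:30], y[:30]
X_val, y_val = X[30:], y[30:]

lams = [0.0, 0.1, 1.0, 10.0, 100.0, 1000.0]
train_errors, val_errors = [], []
for lam in lams:
    w = ridge_fit(X_train, y_train, lam)
    train_errors.append(mse(w, X_train, y_train))
    val_errors.append(mse(w, X_val, y_val))

# Pick the penalty strength with the lowest validation error.
best_lam = lams[int(np.argmin(val_errors))]
print("training errors:  ", [round(e, 3) for e in train_errors])
print("validation errors:", [round(e, 3) for e in val_errors])
print("selected lambda:  ", best_lam)
```

The training error can only grow as the penalty gets stronger, so it is the validation error that guides the choice. In practice, cross-validation over several splits is usually preferred to a single hold-out set.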

The regularization term modifies the error term without depending on the data.
